Background

Extended Reality (XR) is a recently coined umbrella term that covers Virtual Reality, Mixed Reality and Augmented Reality. We define Virtual Reality (VR) as an approach to “completely integrate the user into a computer-generated environment.” This definition matters for distinguishing VR from the concept of Mixed Reality (MR). Furthermore, we do not define MR as “the subfield of VR” but rather as a concept that spatially correlates physical and virtual space “by merging images with a scene or object behind the display.” Milgram and Kishino’s popular reality–virtuality continuum further outlines the transition between AR and MR. They define MR as anything between the “real world” and VR, whereas Augmented Reality (AR) is described as depicting virtual objects in the real world, which for them forms a part of MR.

Although this definition has been widely acknowledged and has also been taken up by market leaders such as Microsoft or Magic Leap to communicate their marketing strategies, it does not capture the distinction between AR and MR. It may even be misleading in that it suggests an understanding of MR as integrating various levels of reality. Even though MR and AR share substantial technological features and design strategies, they imply separate activities and suggest use cases with a different scope.

Schematic illustrations of vision through head-mounted displays for Virtual Reality (A) and Mixed Reality (B).

Head Mounted Displays in Surgery

Until recently, head-mounted displays (HMDs) were bulky, heavy devices with inadequate display resolution. Furthermore, because they hide the real world, Virtual Reality HMDs do not allow the surgeon to see the surgical site and/or the co-workers in the operating room directly. This limited their use significantly.

The recent availability of high-resolution displays and considerably faster processors, and most importantly the development of Mixed Reality technology, is likely to change substantially how surgeons use this technology in the future.

Together with the Interdisciplinary Laboratory Image Knowledge Gestaltung at the Humboldt-Universität zu Berlin, we are currently working on:

- preoperative surgical planning in visceral surgery (VR, MR),

- use of Mixed Reality systems in the operating room (MR-assisted surgery, tele-consulting & mentoring) and

- training of surgical technicians via 360° videos and immersive 3D spaces.

Mixed Reality in Surgical Practice

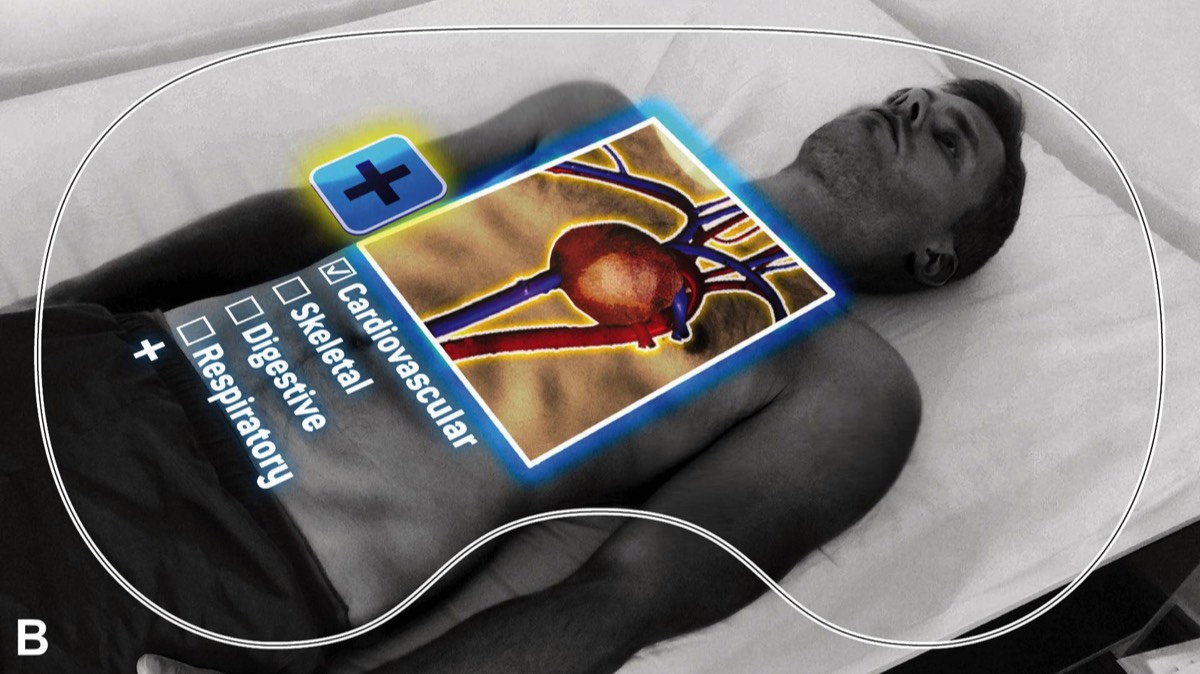

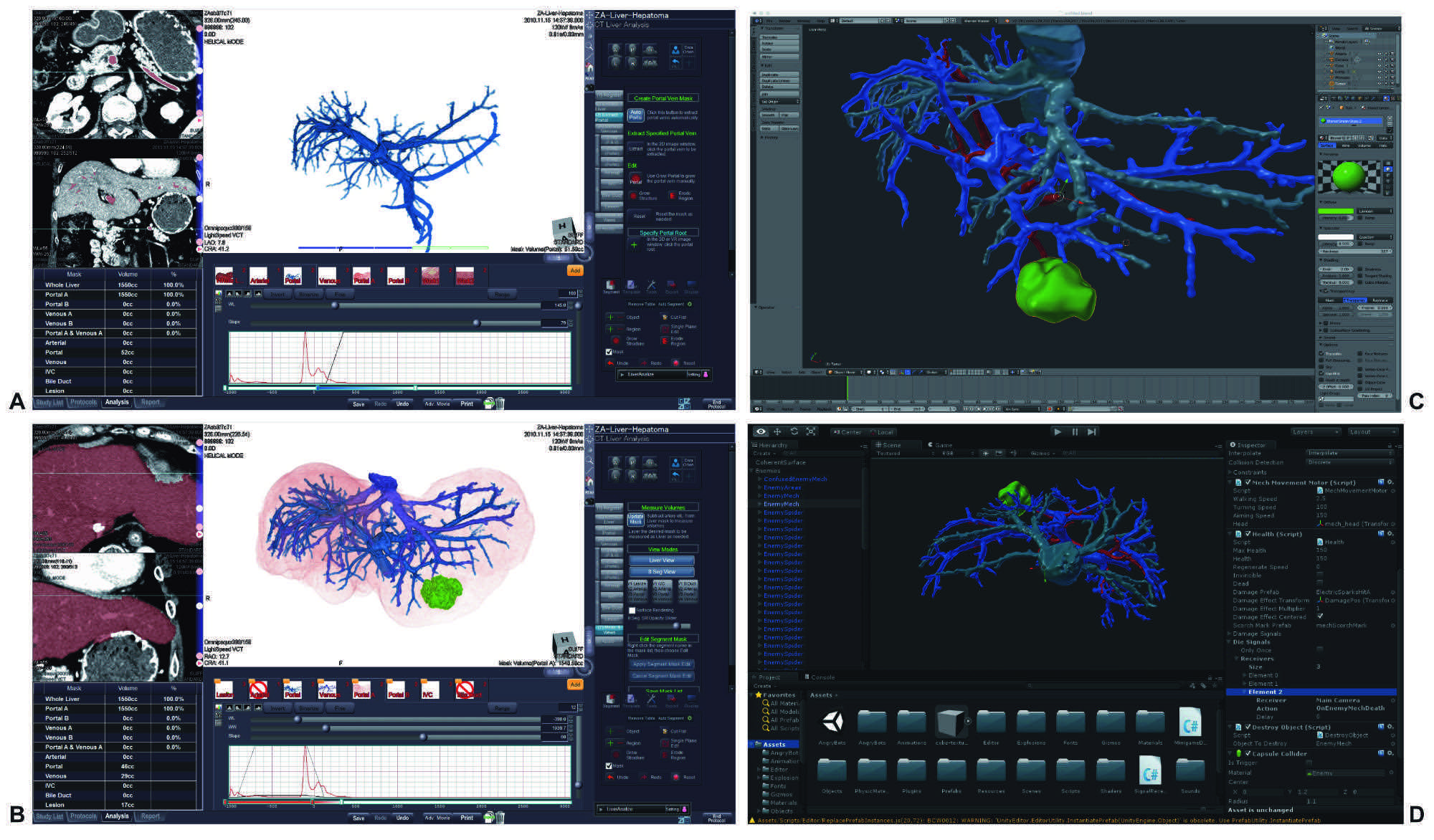

Converting conventional imaging formats into three-dimensional objects for visualization with an MR HMD, and optimizing those models for an intraoperative workflow, requires several software solutions for medical image computing. We compared the open-source software platform 3D Slicer 4.6 and Ziostation2 (Ziosoft Inc., Tokyo, Japan), including its CT Liver Analysis protocol, for segmenting image masks of the hepatic artery, portal vein and hepatic vein from computed tomography data. Both applications allow the export of STL files, a stereolithography format supported by many other software packages. After segmentation, we imported these files into Blender 2.78a (Blender Foundation, Amsterdam, The Netherlands) for texture mapping, i.e. defining the surface texture and color information on the 3D model. In the cross-platform game engine Unity 5.5.2 (Unity Technologies, USA), the polygonal models were processed as FBX files with all texture maps embedded so that they could be displayed and used in a three-dimensional scene. Finally, the software 3D Viewer beta (Microsoft Corp., USA) was used to open the files on Microsoft’s HoloLens MR HMD. It enables stable positioning of the 3D object relative to surfaces in the room, as well as moving, resizing and rotating (horizontal axis).
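As a rough illustration of the STL hand-over between the segmentation and modelling steps, the following Python sketch writes and reads the binary STL layout (an 80-byte header, a 32-bit triangle count, then 50 bytes per triangle) and reports triangle count and bounding box, two values one might check before importing a mesh elsewhere. It is not the tooling used in the project; the function names and the tetrahedron standing in for a segmented vessel mesh are hypothetical.

```python
import struct


def triangle_normal(v1, v2, v3):
    """Unit normal of a triangle from the cross product of two edges."""
    ax, ay, az = (v2[i] - v1[i] for i in range(3))
    bx, by, bz = (v3[i] - v1[i] for i in range(3))
    n = (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)
    length = sum(c * c for c in n) ** 0.5
    return tuple(c / length for c in n) if length else (0.0, 0.0, 0.0)


def write_binary_stl(path, triangles):
    """Write triangles (each a tuple of three (x, y, z) vertices) as binary STL."""
    with open(path, "wb") as f:
        f.write(b"\0" * 80)                         # 80-byte header, left empty here
        f.write(struct.pack("<I", len(triangles)))  # little-endian uint32 triangle count
        for v1, v2, v3 in triangles:
            normal = triangle_normal(v1, v2, v3)
            # 12 float32 values (normal + 3 vertices) and a uint16 attribute field
            f.write(struct.pack("<12fH", *normal, *v1, *v2, *v3, 0))


def read_binary_stl(path):
    """Return the list of triangles stored in a binary STL file."""
    triangles = []
    with open(path, "rb") as f:
        f.seek(80)                                  # skip the header
        (count,) = struct.unpack("<I", f.read(4))
        for _ in range(count):
            values = struct.unpack("<12fH", f.read(50))
            # values[0:3] is the normal; the next nine floats are the vertices
            triangles.append((values[3:6], values[6:9], values[9:12]))
    return triangles


def mesh_summary(triangles):
    """Triangle count and axis-aligned bounding box (min corner, max corner)."""
    vertices = [v for tri in triangles for v in tri]
    lo = tuple(min(v[i] for v in vertices) for i in range(3))
    hi = tuple(max(v[i] for v in vertices) for i in range(3))
    return len(triangles), lo, hi


# Hypothetical stand-in for a segmented vessel mesh: a unit tetrahedron.
TETRAHEDRON = [
    ((0, 0, 0), (0, 1, 0), (1, 0, 0)),
    ((0, 0, 0), (1, 0, 0), (0, 0, 1)),
    ((0, 0, 0), (0, 0, 1), (0, 1, 0)),
    ((1, 0, 0), (0, 1, 0), (0, 0, 1)),
]
```

Calling `write_binary_stl("demo.stl", TETRAHEDRON)` followed by `mesh_summary(read_binary_stl("demo.stl"))` returns the triangle count and the two bounding-box corners. Note that STL carries geometry only, which is why texture and color have to be added in a later step.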

Screenshots of post-processing the computed tomography data: reconstruction of the portal vein anatomy in Ziostation2 (A), volume rendering of hepatic artery, portal vein, hepatic veins, tumor and liver capsule (B), definition of the surface texture and color information on the 3D model in Blender (C), and import of the data into the Unity game engine (D).

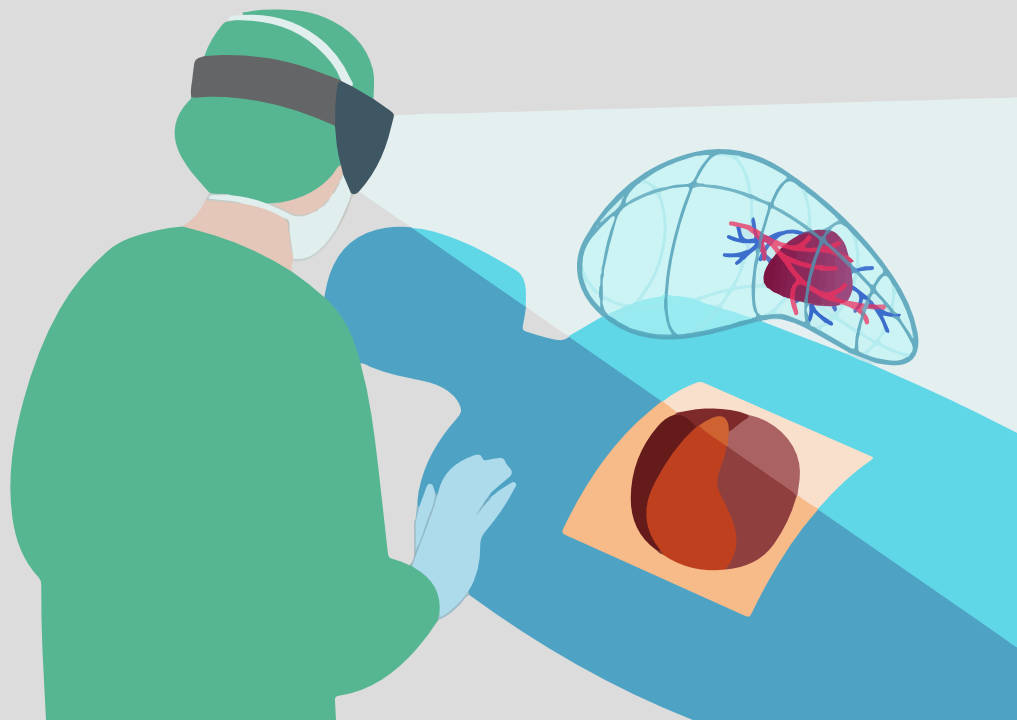

To evaluate the HoloLens during visceral surgery, a patient-specific model was wirelessly transmitted to the HMD worn by the first assistant surgeon. The adjustment wheel in the headband ensured a comfortable fit for a wide range of adult head sizes. In usability testing, all users reported satisfactory comfort when wearing the well-balanced device (weight: 579 g), without significant impairment of movement. Surgeons wore the device for up to 1 hour, usually only during the dissection of the liver parenchyma, which lasted between 30 and 40 minutes. The model showing the vascular anatomy of the patient’s liver was positioned above the thorax over the situs, in front of the sterile drape separating the workspace from the anesthesiologists. This location did not interfere with the line of sight and allowed effortless viewing simply by looking up. Reflections or unwanted incidence of light through the visor of the HMD were minimal. Despite the multitude of technical equipment and the reflecting metal and glass surfaces in the OR, positioning of virtual objects in the room was very stable, without drift (i.e., the virtual object appearing to move away from where it was originally placed) and only minimally impaired by jitter, a high-frequency shaking of the 3D object. Positioning the 3D object in this way left the view of the operating site unobstructed. The slight tinting of the visor and of the waveguide screens that render the 3D image did not substantially impair visibility.
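The two stability terms used above, drift and jitter, can be made concrete with a small Python sketch. It assumes a hypothetical log of the virtual object's position (in metres) sampled over time; the metric definitions are illustrative choices and not the assessment used in the evaluation, which was observational.

```python
import math


def distance(a, b):
    """Euclidean distance between two 3D points."""
    return math.sqrt(sum((a[i] - b[i]) ** 2 for i in range(3)))


def mean_point(points):
    """Component-wise mean of a list of 3D points."""
    return tuple(sum(p[i] for p in points) / len(points) for i in range(3))


def drift(anchor, samples, window=4):
    """Distance from the placed position to the mean of the last `window` samples.

    Averaging over a window suppresses shaking, so what remains is the slow
    displacement away from where the object was originally placed.
    """
    return distance(anchor, mean_point(samples[-window:]))


def jitter(samples):
    """Root-mean-square displacement between consecutive samples.

    Large values indicate high-frequency shaking, even if the object stays
    centred on its anchor on average.
    """
    steps = [distance(a, b) for a, b in zip(samples, samples[1:])]
    return math.sqrt(sum(s * s for s in steps) / len(steps))
```

With these definitions, an object that shakes by a millimetre around its anchor has measurable jitter but no drift, while an object that has settled a few centimetres away from its anchor has drift but no jitter.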

For more information, please refer to our publication in Annals of Surgery.

Schematic illustrations of positioning the liver model during surgery. Left (top on mobile): model above the operating site. Right (bottom on mobile): model overlaid on the operating site. © Michael Pogorzhelskiy

Virtual Reality for Surgical Education

Participating in surgery is essential for gaining experience during surgical training. However, assisting during surgery and applying acquired theoretical knowledge to an actual patient can only be scaled so far. The learning curve therefore tends to be exponential, rising only once knowledge is actually applied in practice.

To enable new learning methods in the field of surgery and reduce the risks for patients and medical personnel, the Volumetric OR project is currently being developed in cooperation with the Interdisciplinary Laboratory Image Knowledge Gestaltung at the Humboldt-Universität zu Berlin. The project explores the requirements for VR-based simulation of surgical use cases and their environments. Our aim is to represent the complex operating room interactions of surgeons, anesthesiologists, and surgical technicians.

The VR application is based on a photogrammetrically reconstructed 3D model of an operating room at the Charité – Universitätsmedizin Berlin. Users can experience the operating room in realistic dimensions using a VR HMD (e.g. HTC Vive) and toggle metadata for key aspects of the environment. To display the time-critical workflow during surgery, volumetric video of a required surgery is integrated into this environment. Volumetric video differs fundamentally from the widely known 360° video in that it can display image information from any viewing angle at each point in time. It does so using depth information gathered from multiple Microsoft Kinect cameras, which makes it truly usable in virtual reality.
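Why depth information allows viewing from any angle can be sketched with the standard pinhole camera model: each depth pixel is back-projected to a 3D point, and the resulting points can then be rendered from an arbitrary viewpoint. The Python example below is a minimal sketch, not the project's capture pipeline. The intrinsic parameters (`fx`, `fy`, `cx`, `cy`), the use of metres, and the convention that a depth of zero means "no measurement" are assumptions for illustration. Merging several cameras would additionally require their relative poses, which is not shown.

```python
def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth in metres to a 3D camera-space point.

    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def depth_image_to_points(depth, fx, fy, cx, cy):
    """Convert a depth image (list of rows, values in metres) to a point list.

    Pixels with depth 0 are skipped (assumed to mean "no measurement").
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z > 0:
                points.append(backproject(u, v, z, fx, fy, cx, cy))
    return points
```

A 360° video, by contrast, stores only color per viewing direction from a single position, so there is no geometry to re-render when the viewer moves.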

Our Team

Igor M. Sauer

Principal investigator & Surgeon

Moritz Queisner

Media Studies

Christoph Rüger

Medical Technology

Simon Moosburner

Postdoc & Surgeon

Peter Tang

Technician

Brigitta Globke

Postdoc & Surgeon

Christopher Remde

Game Design

Michael Pogorzhelskiy

Interaction Design