We warmly invite you to the opening of

»Vessels. Infrastructures of Life« at the Berlin Museum of Medical History at the Charité (bmm), a group exhibition curated by Igor M. Sauer and Navena Widulin with contributions by Assal Daneshgar, Emile de Visscher, Frédéric Eyl, Karl Hillebrandt, Eriselda Keshi, Dietrich Polenz, Moritz Queisner, Iva Rešetar and Igor M. Sauer.

Vernissage Wed, 4 June 2025, 7:00–10:00 pm

Exhibition 5 June – 12 October 2025

Tue, Thu, Fri, Sun, 10:00 am–5:00 pm

Wed, Sat, 10:00 am–7:00 pm

Closed on Mondays

Venue: Berliner Medizinhistorisches Museum der Charité (bmm)

Virchowweg 17

10117 Berlin

What do plants, animals, humans and cities have in common? They all have vascular systems and, therefore, an infrastructure without which they would not be able to survive.

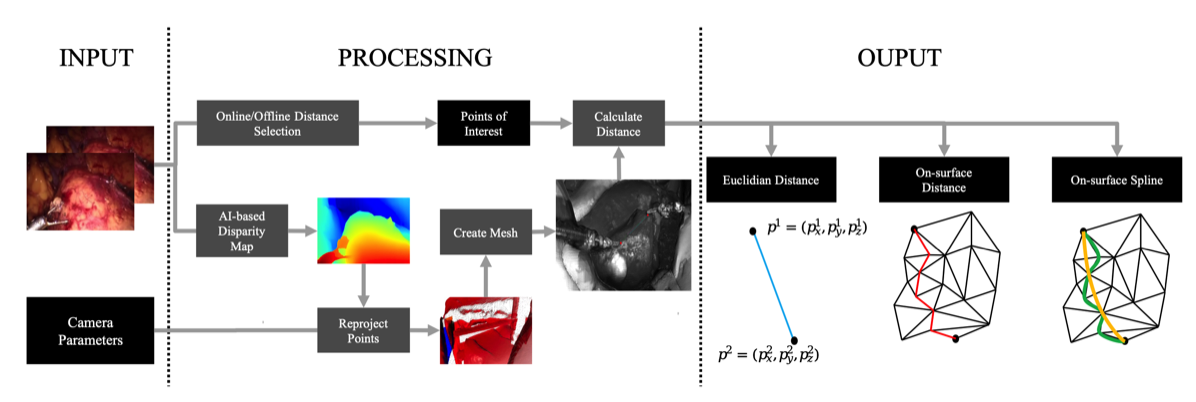

In the human body, the heart, arteries and veins work together to move blood. Plants have a finely branched vascular system that transports water and nutrients. And cities use an underground network of pipelines to supply clean water and remove wastewater. The temporary exhibition, co-curated by Igor Sauer and Navena Widulin, shows how these vessels function and how they can be visualized, used and reproduced.

What can medicine learn from these natural and technical supply systems? What role does an interdisciplinary perspective – spanning biology, design, materials research and medical technology – play in regenerative medicine? And what innovative approaches can be derived from it for the development of artificial and bioartificial donor organs?

»Vessels. Infrastructures of Life« provides insights into the work of designers, materials scientists and surgical researchers who are working together on solutions for the future – inspired by nature, technology and the logic of living systems. From exhibits on transplantation and regenerative medicine to examples of architecture and design, the exhibition brings these often-hidden structures into view. The objects on display are placed in dialogue with specimens from Rudolf Virchow’s historical collection. The exhibition focuses in particular on the connections between natural vessels and human-made networks, such as temperature regulation in buildings or water supply and wastewater disposal in cities.

The temporary exhibition »Vessels. Infrastructures of Life« is a collaboration between the Berlin Museum of Medical History, the Experimental Surgery at the Charité and the Cluster of Excellence »Matters of Activity« of Humboldt-Universität zu Berlin, presented as part of the _matter Festival 2025.